In the first installment of this two-part series, we examine gaps in current methods for collecting reference data used in positional accuracy assessments of geospatial data and products, and what the geospatial community needs to close those gaps. In part two, we’ll look at the work GCS Geospatial is doing in this area and our next steps to advance it.

Addressing inadequate and unsuitable reference data

Positional accuracy assessment of remotely sensed data and other geospatial data and products is a crucial part of Independent Verification and Validation (IV&V). It ensures that defense missions and other customers understand the accuracy, and the margin of error or uncertainty, of these data and products, so they can determine their suitability for specific missions and applications. Just as a thermostat should be checked against a reference thermometer, or a piano tuned against a reference pitch, geospatial data must be checked against robust reference, or “ground truth,” data to assess its accuracy.

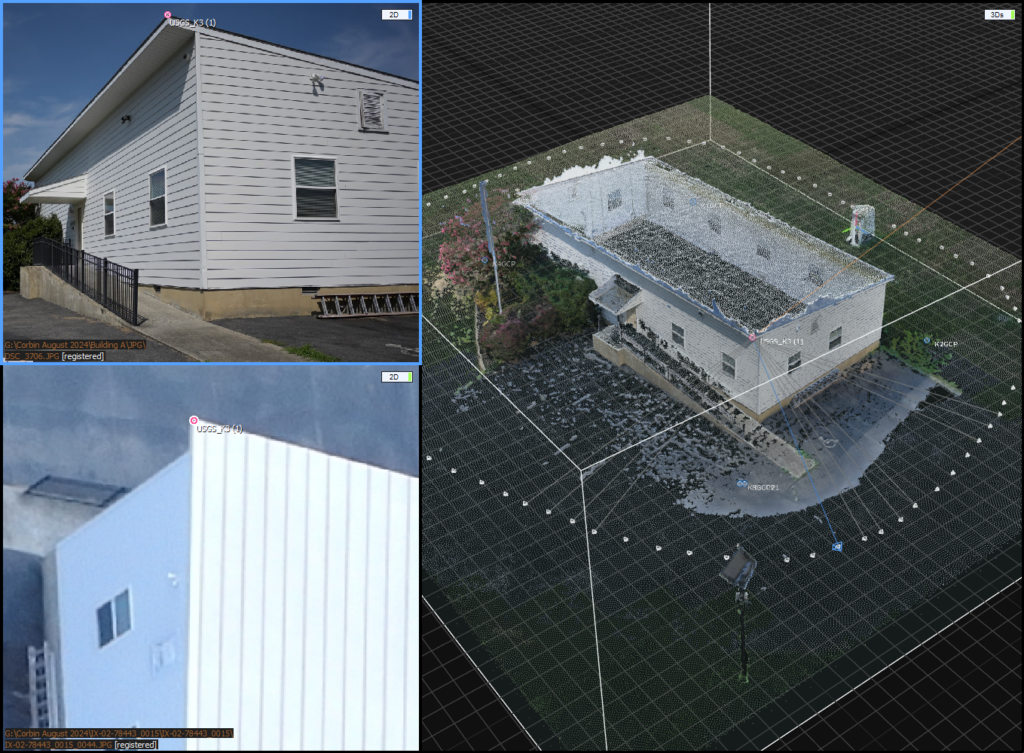

Take, for example, a lidar-based product that promises highly accurate terrestrial 3D models showing the locations of specific building and tree canopy features within a 1-meter margin of error. Missions that need precise locations for specific features will select technologies based on such claims, so independent, unbiased third-party validation focused on accuracy, objectivity, and verification against ground truth data is crucial.
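To make the idea of checking such a claim more concrete, here is a minimal Python sketch of one common quantitative test: comparing a product’s horizontal coordinates with independently surveyed checkpoints and reporting root-mean-square error (RMSE) plus a 95%-confidence horizontal accuracy in the style of the FGDC National Standard for Spatial Data Accuracy (NSSDA). It assumes the two coordinate lists are matched point-for-point, in the same projected coordinate system, and in meters. The function name `horizontal_accuracy`, the sample coordinates, and reading the product’s “1-meter margin of error” as a 95% accuracy figure are illustrative assumptions, not GCS Geospatial’s method.

```python
import math


def horizontal_accuracy(product_xy, reference_xy):
    """Hypothetical helper: horizontal RMSE statistics for matched checkpoints.

    Both inputs are lists of (x, y) tuples in the same projected CRS, in meters,
    where product_xy[i] and reference_xy[i] describe the same ground feature.
    """
    if not product_xy or len(product_xy) != len(reference_xy):
        raise ValueError("need non-empty, point-for-point matched checkpoint lists")
    n = len(product_xy)
    # Sum of squared coordinate differences (product minus reference) per axis.
    sum_dx2 = sum((p[0] - r[0]) ** 2 for p, r in zip(product_xy, reference_xy))
    sum_dy2 = sum((p[1] - r[1]) ** 2 for p, r in zip(product_xy, reference_xy))
    rmse_x = math.sqrt(sum_dx2 / n)
    rmse_y = math.sqrt(sum_dy2 / n)
    rmse_r = math.sqrt(rmse_x ** 2 + rmse_y ** 2)  # radial (horizontal) RMSE
    # NSSDA-style 95% horizontal accuracy; the 1.7308 factor assumes rmse_x ~= rmse_y.
    accuracy_95 = 1.7308 * rmse_r
    return {"rmse_x": rmse_x, "rmse_y": rmse_y, "rmse_r": rmse_r, "accuracy_95": accuracy_95}


if __name__ == "__main__":
    # Illustrative, made-up coordinates; a real assessment would use many more checkpoints.
    product = [(500010.42, 4200020.31), (500250.88, 4200305.12), (500498.10, 4200599.77)]
    reference = [(500010.00, 4200020.00), (500251.30, 4200304.70), (500498.50, 4200600.20)]
    stats = horizontal_accuracy(product, reference)
    claimed_m = 1.0  # advertised margin of error, assumed here to be a 95% figure
    verdict = "meets" if stats["accuracy_95"] <= claimed_m else "does not meet"
    print(f"RMSE_r = {stats['rmse_r']:.3f} m, "
          f"95% accuracy = {stats['accuracy_95']:.3f} m ({verdict} the claim)")
```

The statistic is only as trustworthy as the checkpoints behind it: the NSSDA calls for at least 20 checkpoints distributed across the area of interest, and when RMSE in x and y differ substantially, the single 1.7308 factor no longer applies as written.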

Understanding current limitations in geospatial reference data

Despite its importance, the geospatial community often suffers from inadequate reference data for positional accuracy assessments. This has long been the case, and the problem is now exacerbated by the enormous volume of readily available 2D and 3D data and a growing number of derived products. These data include imagery, digital surface models (DSM), digital terrain models (DTM), point cloud data, 3D mesh data, and other derivative geospatial products.

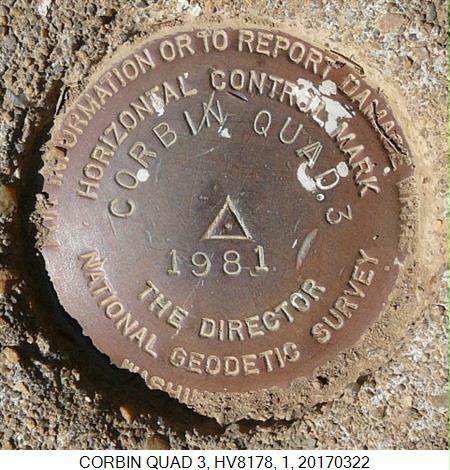

Existing reference data (e.g., ground control point networks) are often collected for purposes other than serving as ground truth, and only in certain types of terrain, which limits true understanding of the positional accuracy of geodata sources and products. These data are also often inadequate in quantity and quality. While conventional survey methods can be used to augment existing ground control networks or collect new ones, they can be time- and cost-prohibitive. Lidar is widely available from sources such as the USGS; however, using it as reference data for positional accuracy assessments is often problematic because the ground imagery needed to provide context for features of interest is frequently unavailable, and collecting it through additional ground surveys runs into the same time and cost challenges.

The path forward to address gaps in ground truth

When reference data is inadequate or unsuitable, those who need robust assessments of positional accuracy risk receiving informal, loosely supported accuracy reporting, which reduces confidence in those assessments. This challenge makes clear that the geospatial community needs to move beyond suboptimal reference data and less-than-definitive accuracy statements. The community must move toward more cost-effective, purpose-built control data collection that supports objective, quantitative positional accuracy assessments of the extensive 2D and 3D products widely used today. Fortunately, many of the same technological advances that have driven the explosion in available remotely sensed geodata can be applied to enable a more cost-effective and automated approach to reference data collection and accuracy assessment.

In part two of this series, we’ll take a look at the work GCS Geospatial is doing to leverage these advances and address the needs of the geospatial community and its customers.